Imagine driving a car, just like you do now, and using the steering wheel to direct where the car goes. Now, imagine driving a car using nothing more than your mind. Hands-free control of autonomous systems is fast becoming a reality – and a UTS research project is leading the way.

A collaboration between Professor Francesca Iacopi and Distinguished Professor Chin-Teng Lin in the Faculty of Engineering and IT, the work has produced a novel brain-computer interface that uses human brainwaves to control machines.

The research, backed by $1.2 million in funding from the Defence Innovation Hub, has three main components: a biosensor to detect electrical signals from the brain, a circuit to amplify the signals, and an artificial intelligence decoder to translate them into instructions – stop, turn right, turn left – that the machine can understand.

“I see this technology as the next generation of human-computer interfaces,” says Distinguished Professor Lin.

“Our technology can translate the brain’s electrical signals into a format that can be caught directly by a machine or a robot, and the robot will follow the commands.”

- Distinguished Professor CT Lin

Next step in carbon-based biosensing

Professor Iacopi, a leading researcher in nanotechnology, heads the development of the biosensor, which is worn on the head. The sensor is made of epitaxial graphene – essentially multiple layers of very thin, very strong carbon – grown directly onto a silicon-carbide-on-silicon substrate.

The result is a novel biosensor that overcomes three major challenges of graphene-based biosensing: corrosion, durability, and skin contact resistance, where non-optimal contact between the sensor and skin impedes the detection of electrical signals from the brain.

“We’ve been able to combine the best of graphene, which is very biocompatible and very conductive, with the best of silicon technology, which makes our biosensor very resilient and robust to use,” says Professor Iacopi.

Using brainwaves to control machines

Distinguished Professor Lin is working on the amplification circuit and the AI brain decoding technology. He and his team have achieved two major breakthroughs in their work so far.

The first was figuring out how to minimise the noise created by the body or the surrounding environment so that the technology can be used in real-world settings. The second was increasing the number of commands that the decoder can deliver within a fixed period of time.

“Current BCI technology can issue only two or three commands such as to turn left or right or go forward,” Distinguished Professor Lin says.

“Our technology can issue at least nine commands in two seconds. This means we have nine different kinds of commands and the operator can select one from those nine within that time period.”
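
As a rough illustration of what moving from three commands to nine means, each selection can be expressed in bits. The sketch below is not part of the UTS decoder: it assumes every command is equally likely and is decoded without error, neither of which the researchers claim, and the function names are hypothetical.

```python
import math


def bits_per_selection(num_commands: int) -> float:
    """Information in one error-free choice among equally likely commands."""
    return math.log2(num_commands)


def bits_per_second(num_commands: int, window_seconds: float) -> float:
    """Idealised throughput when one command is selected per time window."""
    return bits_per_selection(num_commands) / window_seconds


# Figures quoted above: nine commands, one selection every two seconds.
nine = bits_per_second(9, 2.0)

# Comparison point: three commands over the same two-second window.
# The window length for existing systems is an assumption; no figure is quoted.
three = bits_per_second(3, 2.0)

print(f"9 commands: {nine:.2f} bits/s, 3 commands: {three:.2f} bits/s")
```

Under those assumptions, a choice among nine commands carries exactly twice the information of a choice among three, since log2(9) = 2 × log2(3).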

Revolutionising human-computer interactions

Together, the researchers have produced a prototype brain-computer interface that has huge potential for application across multiple industries.

In a defence context, the technology lets the Australian Army explore how soldiers interact with robotic systems during tactical missions. At present, soldiers must look at a screen and use their hands to operate robotic platforms, when they could instead be looking up and supporting their team in other ways.

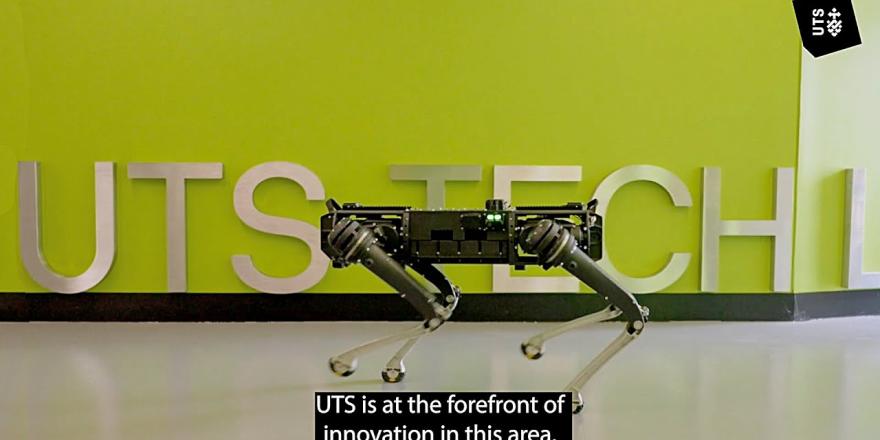

Lieutenant Colonel Kate Tollenaar is the project lead with the Australian Army.

“We are exploring how a soldier can operate an autonomous system – in this example, a quadruped robot – with brain signals, which means the soldier can keep their hands on their weapon, potentially enhancing their performance as highlighted in the Army Robotic and Autonomous Systems Strategy,” she says.

“This brain-computer interface explores how a robotic platform responds in a real-world environment to support operations by a soldier.”

Elsewhere, the technology could offer significant value in the industrial, medical and disability sectors.
