What does a smile sound like? It’s a question that’s preoccupying some of the world’s leading experts in the fields of machine and human perception — and now, it’s driving the development of next-generation bionic glasses for people who are blind or low vision.

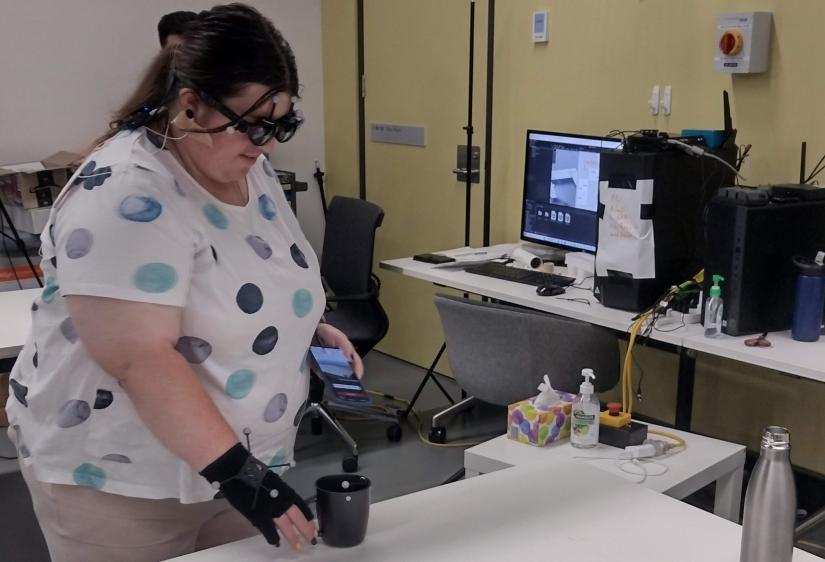

Called ARIA, the wearable technology has emerged from years of painstaking work by Sydney start-up ARIA Research and researchers from the University of Technology Sydney and the University of Sydney.

Co-designed in partnership with the blind community, including echolocation expert Daniel Kish, ARIA combines visual perception, spatial understanding and proprioceptive coordination to help people ‘see’ through sound.

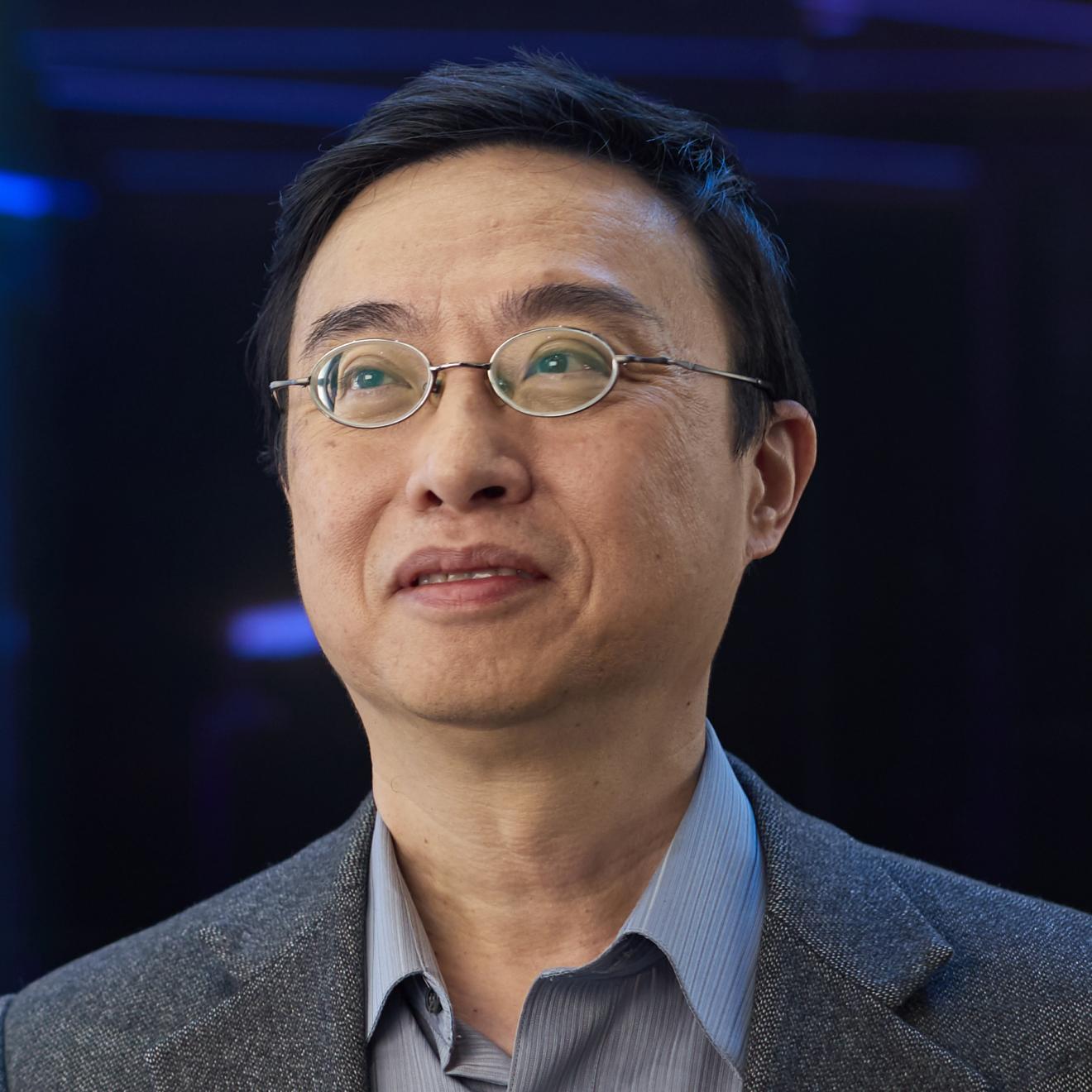

“We want to help the user to understand the environment in two major ways: what does the environment look like, and then what are the specific objects within it?” says UTS research Professor Chin-Teng Lin, a global leader in brain-computer interface research who’s leading the project’s human perception team.

Vision via sound

ARIA is a non-invasive device that can be worn like ordinary glasses. It’s equipped with a camera and other sensors that collect data about the surrounding environment and objects within it; this information is transmitted via audio cues that help users visualise the world around them.

Imagine an ARIA user arriving for coffee at a friend’s house. ARIA’s audio information explains the layout and the user’s position within the space, enabling them to navigate the environment independently.

When they sit down on the couch, the system delivers audio cues for each object on the coffee table in front of them — for example, the cue for a phone might be the buzz we associate with a phone vibrating, while the cue for a plant might be the sound of leaves rustling in the breeze.

The volume of the cues indicates the user’s proximity to each object, which means they can reach out and touch them based on their understanding of their own position in space. If they turn their body, the audio signals and volume adjust to reflect the change in position. The technology will also describe other aspects of the environment within its line of sight.
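As a rough illustration of the idea that cue volume tracks proximity and shifts as the user turns, here is a minimal sketch. ARIA's actual audio language is not public, so everything in it is an assumption: the function name `cue_levels`, the 5-metre cut-off, the linear loudness falloff and the use of simple stereo panning are all hypothetical choices made for the example.

```python
import math

def cue_levels(distance_m, bearing_deg, max_range_m=5.0):
    """Return (left, right) channel gains in [0, 1] for one object cue.

    Hypothetical model, not ARIA's method:
    - distance_m: distance from the wearer to the object, in metres
    - bearing_deg: 0 is straight ahead, positive is to the wearer's right
    """
    # Louder when closer: linear falloff to silence at max_range_m (assumed).
    closeness = max(0.0, 1.0 - distance_m / max_range_m)
    # Clamp the bearing to the frontal half-plane, [-90, 90] degrees.
    bearing = max(-90.0, min(90.0, bearing_deg))
    # Constant-power pan: map the bearing to an angle in [0, pi/2].
    pan = (bearing + 90.0) / 180.0 * (math.pi / 2)
    return closeness * math.cos(pan), closeness * math.sin(pan)

# A phone one metre away, first dead ahead, then after the wearer turns left
# so that it sits 45 degrees to their right.
print(cue_levels(1.0, 0.0))
print(cue_levels(1.0, 45.0))
```

In this sketch, turning the body only changes `bearing_deg`, so the same cue slides between the ears while its overall loudness still reflects distance.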

This is what’s called ‘sensory augmentation’, and it’s ARIA’s key point of difference in the assistive technology space: the device enhances users’ other senses, specifically hearing and touch, to help render the world around them.

A symphony of sound

While the specifics of ARIA’s audio language are currently a tightly held secret, Chief Product Officer and co-founder Mark Harrison says it draws in part on the cadence and structure of orchestral music.

“When two people are talking on top of each other, it’s very difficult to understand — but we have no problem listening to a symphony and hearing the strings on top of the woodwinds on top of the percussion,” he says.

It’s an elegant concept, but translating it into usable technology is a complex process. One of the major challenges for Professor Lin’s team is creating a language system that can be adjusted to each user’s ‘mental workload’ — that is, how hard the brain is working to process information.

As such, the research team is developing a sensory solution for use on the glasses that will measure various markers of mental workload, such as heart or breath rate variability, and adjust the amount of information the system transmits to optimise the user experience.

“If the user is experiencing higher mental workload, we’ll provide as simple information as possible. If they’re experiencing low mental workload, we can provide more information so they can understand the environment more deeply,” Professor Lin says.
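The trade-off Professor Lin describes can be sketched as a simple budget on how many cues are played at once. This is an illustration only, not the team's design: the `select_cues` function, the 0-to-1 workload score, the per-cue priorities and the cap of six cues are all hypothetical.

```python
def select_cues(cues, workload, max_cues=6):
    """Pick which cues to play for a given mental workload score.

    Hypothetical model, not ARIA's method:
    - cues: list of (name, priority) tuples; higher priority matters more
    - workload: 0.0 (relaxed) to 1.0 (overloaded), however it is estimated
    """
    workload = max(0.0, min(1.0, workload))
    # Higher workload leaves room for fewer cues; always keep at least one.
    budget = max(1, round(max_cues * (1.0 - workload)))
    ranked = sorted(cues, key=lambda cue: cue[1], reverse=True)
    return [name for name, _ in ranked[:budget]]

scene = [("phone", 2), ("plant", 1), ("cup", 3), ("doorway", 5)]
print(select_cues(scene, workload=1.0))  # only the top-priority cue
print(select_cues(scene, workload=0.0))  # every cue, most important first
```

How workload would be estimated from markers such as heart or breath rate variability is left out here, since the article does not describe it.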

Making the impossible possible

To date, the researchers have produced a prototype that can perceive and identify up to 120 objects and help users engage in a range of wayfinding and avoidance activities. A pilot clinical trial of the technology is scheduled for later this year.

But the work is far from done. Additional features, including understanding the environment from topographical and symbolic perspectives, are next on the list, as is the holy grail: non-verbal communication.

This idea, which would see the technology identify and communicate gestures such as nodding, eye contact and facial expressions, was inspired by one of ARIA’s early testers.

“He said, ‘Rob, I’m 38 years old. I’ve got two kids in their teens. I can always hear them fight, but I can never hear them smile,’” says ARIA CEO and co-founder Robert Yearsley.

“And that kinda got me. I thought well, we should do that.”

The research is funded by the Australian Government’s BioMedTech Horizons 4 Programme and the Cooperative Research Centre Projects (CRC-P) scheme.

Research team

- Co-Director, Australian Artificial Intelligence Institute, UTS

- Research Director, Robotics Institute, UTS

- Lecturer, School of Computer Science, UTS

- Lecturer, Orthoptics, UTS

- Research Associate, UTS

- Head, Computing and Audio Research Lab, University of Sydney

- Senior Lecturer, School of Aerospace, Mechanical and Mechatronic Engineering, University of Sydney

- Senior Lecturer, Sydney Institute for Robotics and Intelligent Systems, University of Sydney

- Dr Wanli Ouyang, Faculty of Engineering, University of Sydney

- Robert Yearsley, CEO and Co-Founder, ARIA Research

- Mark Harrison, Co-Founder and Chief Product Officer, ARIA Research