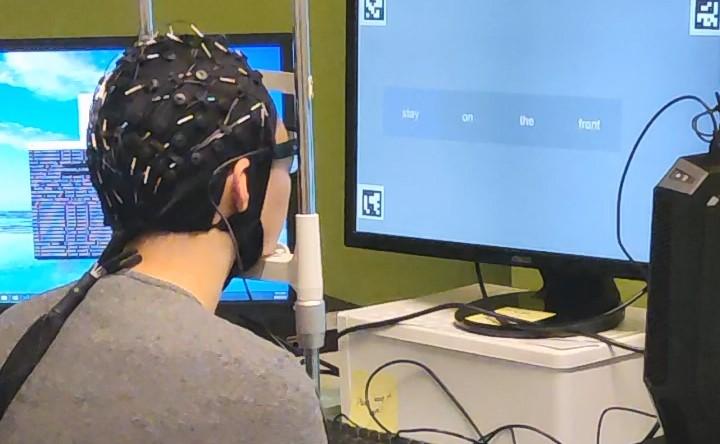

UTS researcher tests new mind-reading technology. Image: UTS

Researchers at the University of Technology Sydney's GrapheneX-UTS Human-centric Artificial Intelligence Centre have developed a portable, non-invasive system that can translate silent thoughts into text. The technology could aid communication for people who are unable to speak because of conditions such as stroke or paralysis. It could also enable seamless interaction between humans and machines, such as operating a bionic arm or a robot.

The study, led by Distinguished Professor CT Lin with first author Yiqun Duan and PhD candidate Jinzhao Zhou, has been selected as a spotlight paper at the NeurIPS conference, scheduled for December 12, 2023, in New Orleans.

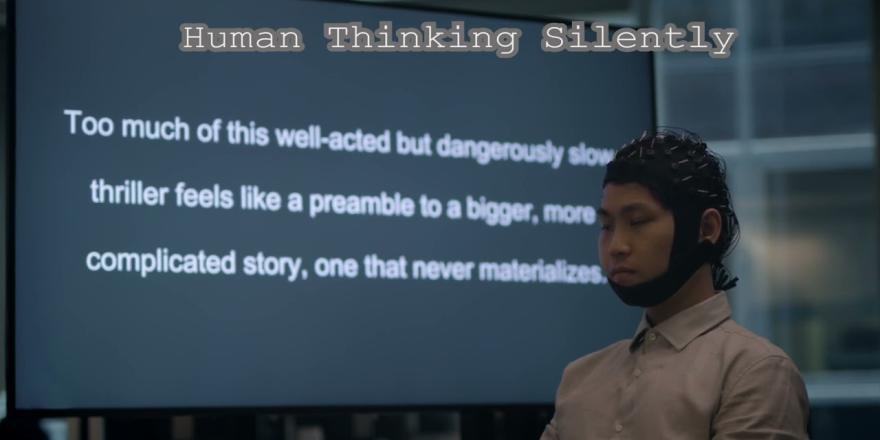

Participants in the study wore a cap that recorded electrical brain activity by electroencephalogram (EEG) while they silently read passages of text. An AI model called DeWave, developed by the researchers, segments the EEG wave into distinct units that capture specific characteristics and patterns from the human brain. DeWave learns from large quantities of EEG data to translate these signals into words and sentences. A demonstration of the technology can be seen in this video.
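
To give a rough sense of what segmenting a signal into distinct units can mean, here is a minimal Python sketch of vector quantization: a continuous signal is cut into short windows, and each window is replaced by the index of the closest entry in a small codebook. This is an illustration only, not the researchers' implementation. The function names, the window length, the toy signal and the hand-written codebook are all assumptions made for this example; a real system would learn its codebook from recorded EEG data.

```python
import math


def segment(signal, window):
    """Split a 1-D signal into consecutive, non-overlapping windows.

    Trailing samples that do not fill a whole window are dropped.
    """
    return [signal[i:i + window] for i in range(0, len(signal) - window + 1, window)]


def quantize(segments, codebook):
    """Map each segment to the index of its nearest codebook vector (Euclidean distance)."""
    tokens = []
    for seg in segments:
        distances = [math.dist(seg, code) for code in codebook]
        tokens.append(distances.index(min(distances)))
    return tokens


# Toy signal and a hypothetical three-entry codebook (both invented for illustration).
signal = [0.1, 0.0, 0.9, 1.1, -1.0, -0.8, 0.05, -0.1]
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0]]

tokens = quantize(segment(signal, window=2), codebook)
print(tokens)  # [0, 1, 2, 0]
```

The resulting list of integers is a discrete sequence, which is the kind of input a language model can work with more readily than a raw waveform.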

Unlike previous methods that required invasive procedures or bulky MRI machines, this technology uses a cap to record EEG signals, making it more practical for daily life. The study involved 29 participants, so the system is likely to be more robust and adaptable than earlier decoding methods that were tested on only a small number of individuals.

“This research represents a pioneering effort in translating raw EEG waves directly into language, marking a significant breakthrough in the field. It is the first to incorporate discrete encoding techniques in the brain-to-text translation process, introducing an innovative approach to neural decoding. The integration with large language models is also opening new frontiers in neuroscience and AI.”

Distinguished Professor CT Lin.

This research builds on brain-computer interface technology that UTS previously developed in collaboration with the Australian Defence Force, which used brainwaves to command a quadruped robot, as demonstrated in this ADF video.